Machine learning systems are all around us. They personalize what we see online, provide recommendations, and even power decision-making in critical domains such as healthcare and finance. Yet, with all their promise, there’s a looming concern that’s becoming harder to ignore: the rise of adversarial AI.

When you hear “adversarial AI,” it might sound like some futuristic, sci-fi villain. But the reality is much closer to home.

It’s about malicious attempts to manipulate machine learning models to behave in unintended ways. Let’s break down what’s going on with adversarial AI, why it matters, and what can be done to combat it.

What is Adversarial AI?

In simple terms, adversarial AI refers to attempts to trick a machine learning model by feeding it misleading or manipulated data. Imagine showing a picture of a cat to a facial recognition system and having it identify the cat as a human face. This is the kind of mistake adversarial attacks can cause.

In many cases, the manipulated inputs are so subtle that the human eye might not even notice the difference. But for a machine learning model, those tiny changes can cause significant confusion.

According to Wiz, adversarial AI attacks come in different forms, but one of the most well-known is the adversarial example. It’s a specially crafted input designed to mislead a machine-learning algorithm into making an incorrect prediction or decision.
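
To make the idea of an adversarial example concrete, here is a minimal sketch of one well-known way to craft one, the fast gradient sign method (FGSM), applied to a tiny logistic-regression classifier. The weights, the input, and the `eps` budget are all made up for illustration; the source does not name a specific method or model.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_perturb(x, y, w, b, eps):
    """Shift each feature of x by eps in the direction that increases the loss."""
    p = sigmoid(w @ x + b)
    grad_x = (p - y) * w  # gradient of the cross-entropy loss with respect to x
    return x + eps * np.sign(grad_x)

# Hypothetical toy model and input (assumptions, not taken from a real system).
w = np.array([0.5, -0.5, 0.5, -0.5])
b = 0.0
x = np.array([0.2, -0.1, 0.1, -0.2])  # clean input whose true label is 1
y = 1

x_adv = fgsm_perturb(x, y, w, b, eps=0.2)

clean_pred = int(sigmoid(w @ x + b) > 0.5)    # model is right on the clean input
adv_pred = int(sigmoid(w @ x_adv + b) > 0.5)  # model is wrong on the perturbed input
print(clean_pred, adv_pred)
```

In this four-feature toy the change of 0.2 per feature is large relative to the input. On real images with many thousands of pixels, a much smaller per-pixel change is often enough, which is why such perturbations can go unnoticed by people.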

Types of Adversarial Attacks

Adversarial AI techniques vary in complexity and purpose. Some are aimed at disrupting services, while others have more severe implications, like breaching security protocols. Here are a few common types:

| Type of Attack | Description |
| --- | --- |
| Evasion attacks | Bypass a machine learning model’s detection by tweaking inputs at prediction time, for example altering an image so that it is misclassified. |
| Poisoning attacks | Attackers insert malicious samples into the training data, causing the model to learn incorrect patterns. |
| Inference attacks | Aim to extract sensitive information from the model, potentially exposing private or proprietary data. |
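
As a rough illustration of a poisoning attack, the sketch below trains a deliberately simple nearest-centroid classifier twice: once on clean data and once after an attacker has injected a few mislabeled points. The classifier, the one-dimensional data, and the injected points are all hypothetical and chosen only to keep the effect easy to follow.

```python
import numpy as np

def fit_centroids(X, y):
    """Nearest-centroid 'training': store one mean vector per class."""
    return {int(c): X[y == c].mean(axis=0) for c in np.unique(y)}

def predict(centroids, x):
    """Return the class whose centroid is closest to x."""
    return min(centroids, key=lambda c: np.linalg.norm(x - centroids[c]))

# Hypothetical clean data: class 0 sits near 0, class 1 sits near 10.
X = np.array([[0.0], [1.0], [2.0], [8.0], [9.0], [10.0]])
y = np.array([0, 0, 0, 1, 1, 1])
clean_model = fit_centroids(X, y)

# Poisoning: the attacker adds three points labeled 0 far inside class 1 territory,
# which drags the class 0 centroid toward class 1.
X_poisoned = np.vstack([X, [[14.0], [14.0], [14.0]]])
y_poisoned = np.concatenate([y, [0, 0, 0]])
poisoned_model = fit_centroids(X_poisoned, y_poisoned)

probe = np.array([8.0])  # a point that clearly belongs to class 1
print(predict(clean_model, probe), predict(poisoned_model, probe))
```

The model code never changes between the two runs. Only the training data does, which is what makes poisoning hard to spot by inspecting the model alone.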

Why Adversarial AI is Such a Big Deal

You might be thinking: “Okay, so what if a machine learning model misclassifies a cat as a dog?” In many cases, the stakes can be low, but in others, the consequences could be far more dangerous.

Think about self-driving cars. They rely heavily on machine learning to make real-time decisions about their environment. If an attacker can trick the car’s sensors into misinterpreting road signs or pedestrians, it could lead to life-threatening accidents.

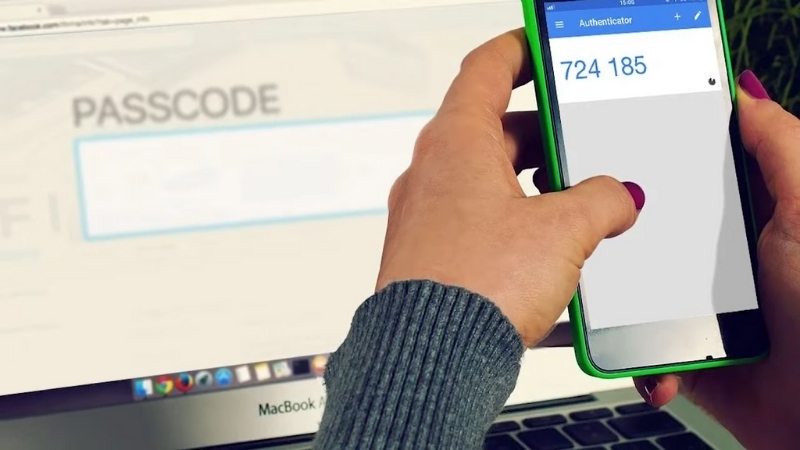

Another area where the stakes are high is cybersecurity. Many systems use machine learning to detect malware or intrusions. An adversarial attack could allow hackers to slip through the cracks by tricking the model into thinking their malicious software is harmless.

On top of that, machine learning is increasingly used in decision-making for finance, healthcare, and even criminal justice. Misleading these systems could have significant real-world consequences—from wrongly denying loans to misidentifying suspects.

The Silent Nature of Adversarial AI

One of the scariest things about adversarial AI is how silent and invisible these attacks can be. In some cases, the changes made to trick the model are almost imperceptible to humans.

This subtlety makes defending against adversarial AI especially hard. How do you stop something that’s almost invisible to the naked eye but perfectly engineered to fool machines?

How Machine Learning Models Fall for Adversarial AI

You might wonder why machine learning models can be so easily tricked. The answer lies in how they “learn.” Machine learning models aren’t perfect. They are trained on large amounts of data, and through that training they learn patterns and make predictions based on what they’ve seen.

But machine learning models don’t “see” the world like humans do. Instead of interpreting images as objects or faces, they analyze data points, looking for patterns. This makes them vulnerable to carefully crafted inputs that are designed to disrupt those patterns.

Adversarial examples take advantage of this flaw. By finding weak spots in a model’s understanding, attackers can exploit those vulnerabilities to make the model give incorrect results. It’s like hacking the brain of a machine by feeding it carefully disguised misinformation.
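
One commonly cited reason these weak spots exist is that many small changes add up. The sketch below assumes a purely linear score `w @ x` with hypothetical unit-norm weights (real models are more complex) and shows how the same tiny per-feature change moves the score far more when the input has many features.

```python
import numpy as np

def worst_case_shift(dim, eps):
    """Largest drop in a linear score w @ x when every feature moves by at most eps."""
    w = np.ones(dim) / np.sqrt(dim)  # hypothetical weights with unit L2 norm
    delta = -eps * np.sign(w)        # push every feature against its weight
    return float(w @ delta)          # equals -eps * sum(|w|) = -eps * sqrt(dim)

small = worst_case_shift(dim=4, eps=0.01)       # about -0.02
large = worst_case_shift(dim=10_000, eps=0.01)  # about -1.0
print(small, large)
```

With four features the score barely moves. With ten thousand features, roughly the size of a small image, the same per-feature budget shifts the score by about a full unit, which can be enough to cross a decision boundary.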

So, What Can We Do About It?

As adversarial AI techniques evolve, so do the defenses against them. Researchers and companies are working hard to develop ways to detect and prevent these attacks. But like many things in cybersecurity, it’s a constant game of cat and mouse.

Several strategies are being developed to protect machine learning systems from adversarial threats:

| Technique | Description |
| --- | --- |
| Adversarial training | Involves training the model with adversarial examples so it can recognize and defend against them. Prepares the model for real-world attacks. |
| Robustness testing | Models undergo rigorous testing to identify weak points and improve resistance to adversarial manipulation, similar to security testing in software. |
| Defensive distillation | Aims to make the model’s decision-making process less sensitive to small input changes, making it harder for attackers to trick the system. |
| Explainable AI | Makes machine learning models more transparent, allowing us to better understand their decisions and detect when something seems off. |
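
Robustness testing can be sketched in a few lines for the simplest case. The example below assumes a linear classifier and an attacker who may change each feature by at most `eps`; under those assumptions the worst case can be computed exactly. The weights and data points are hypothetical, and real models generally need attack-based or approximate testing instead.

```python
import numpy as np

def robust_accuracy(X, y, w, b, eps):
    """Fraction of points still classified correctly under any per-feature change <= eps."""
    signed = np.where(y == 1, 1.0, -1.0)      # map labels {0, 1} to {-1, +1}
    margin = signed * (X @ w + b)             # positive means correctly classified
    worst_case = margin - eps * np.abs(w).sum()  # strongest attack lowers the margin by eps * ||w||_1
    return float(np.mean(worst_case > 0))

# Hypothetical linear model and labeled test points.
w = np.array([1.0, -1.0])
b = 0.0
X = np.array([[2.0, 0.0], [0.5, 0.0], [0.0, 2.0], [0.0, 0.25]])
y = np.array([1, 1, 0, 0])

for eps in (0.0, 0.2, 0.5):
    print(eps, robust_accuracy(X, y, w, b, eps))
```

Reporting accuracy at several values of `eps`, rather than a single clean accuracy number, shows which inputs sit close to the decision boundary and how quickly performance degrades as the attacker's budget grows.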

The challenge here is that attackers are constantly coming up with new ways to bypass defenses. It’s an ongoing race, and as one side develops new methods of protection, the other side is working just as hard to find new ways to break through.

The Limitations of Current Defenses

Here’s why defending against adversarial AI is challenging:

- Models Generalize: Machine learning models are designed to generalize from the data they’re trained on. However, adversarial examples exploit this by landing in gray areas that the training data never covered, where the model has no reliable basis for its prediction.

- Invisible Attacks: Many adversarial manipulations are so subtle that humans can’t detect them. If we can’t see the problem, it becomes difficult to design foolproof defenses.

- Time and Resources: While larger organizations may invest in adversarial AI defenses, smaller companies might not have the resources or expertise to keep up. This uneven landscape can make certain industries more vulnerable than others.

The Bottom Line

Adversarial AI is a rising challenge in the world of machine learning. Its ability to silently manipulate sophisticated systems makes it a serious concern for industries across the board.

From self-driving cars to cybersecurity and beyond, the potential consequences of these attacks can be far-reaching and, in some cases, life-threatening.